An email sent on 25 October 2006 at 5:10 P.M. to the Admissions board

As I look towards updating the mathematics entrance test, the natural question that arises is the extent to which the spring 2006 run properly placed students. As I am chair only of the national site division, I looked only at students placed here.

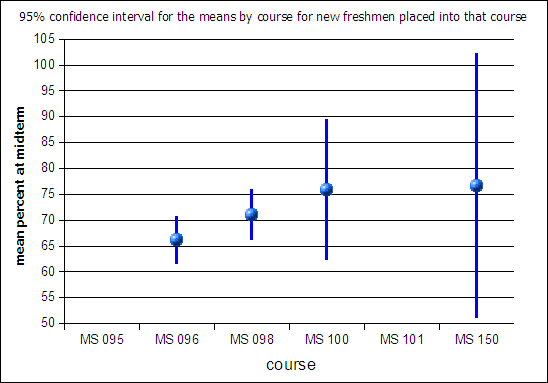

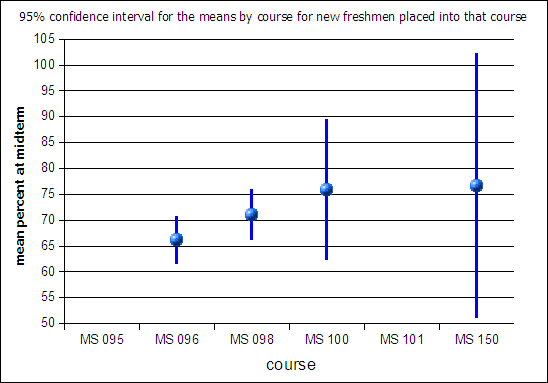

The preliminary results below utilize midterm averages. Individual students succeed or fail for very individual reasons. At the level of the individual student no statistical conclusions can be drawn, hence the choice to move to a group average. To reflect the inherent uncertainty in these results, I also include a 95% confidence interval for the mean.
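
For anyone wishing to reproduce this kind of interval, the following is a minimal sketch and not the spreadsheet calculation itself. The function name, its parameters, and the sample scores are all hypothetical. By default it uses a normal approximation for the critical value; for a small n, such as a lightly enrolled course, a Student's t critical value can be passed in instead and yields a wider interval.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(scores, confidence=0.95, critical_value=None):
    """Return (lower, mean, upper) for the mean of a list of scores.

    critical_value is optional. When omitted, a normal (z) critical value
    is used, which is only an approximation for small samples. Pass a
    Student's t critical value from a table for small n.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("at least two scores are needed to estimate spread")
    sample_mean = mean(scores)
    # Standard error of the mean: sample standard deviation over sqrt(n).
    standard_error = stdev(scores) / sqrt(n)
    if critical_value is None:
        # Two-sided critical value, about 1.96 for 95% confidence.
        critical_value = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = critical_value * standard_error
    return sample_mean - margin, sample_mean, sample_mean + margin


# Hypothetical midterm percentages for one small course (not real data).
example_scores = [62, 75, 81, 70, 77]

# Normal approximation.
print(mean_confidence_interval(example_scores))

# Same data with the tabled t critical value for 4 degrees of freedom.
print(mean_confidence_interval(example_scores, critical_value=2.776))
```

The second call shows why a course with few students gets a long vertical bar: the same spread in scores produces a larger margin once the small sample size is accounted for.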

Course by course, with some data still out, the initial results look fairly promising. On average, students are passing the courses into which they were placed. In the following diagram the courses are on the x-axis and the means are on the y-axis. The blue balls in the center of the vertical bars are the means for the new freshmen, and only the new freshmen, placed into that particular course. MS 100 had a small n, hence the large confidence interval.

Although MS 098 is slightly lower than MS 096 and MS 100, the differences are not statistically significant and the 73% average in MS 098 is still a passing average.

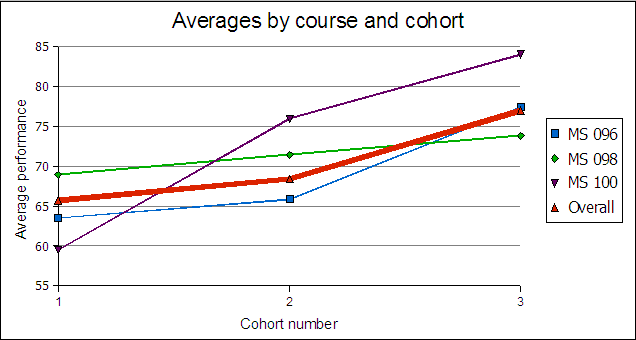

When I ran cross-tabs against the cohort, I noticed an apparent trend with English cohort in each course. The following chart notes this trend, course by course. Bear in mind that 1 is the lowest English cohort and 3 is the highest English cohort:

This data shows a strong increase in the mean midterm percentage by cohort for the new freshmen. An increase of one in the cohort is worth a rise of about five percentage points in the midterm average. This suggests English skills, or the lack thereof, are affecting the students in the math courses. Although the underlying n is small, anecdotally there is the suggestion that a student in cohort one should not be placed into MS 100.
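
The "rise per cohort" figure is the slope of a straight line fitted through the cohort means. As a minimal sketch of that arithmetic, the function below computes an ordinary least-squares slope and intercept. The function name and the three example means are hypothetical, chosen only so that the slope comes out to five; they are not the values from the spreadsheet.

```python
def least_squares_slope(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least two points")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sum of squared deviations in x, and of cross deviations in x and y.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical mean midterm percentages for English cohorts 1, 2 and 3.
cohorts = [1, 2, 3]
hypothetical_means = [68, 73, 78]

slope, intercept = least_squares_slope(cohorts, hypothetical_means)
print(f"rise per cohort: {slope:.1f} percentage points")
```

With only three cohort means per course, a slope of this kind describes the trend but carries considerable uncertainty.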

For me the take-home message is that, by and large, the entrance math test, which is the placement test, worked fairly well. Any update should tweak but not substantively change the instrument. For what the college wants the instrument to do, the instrument is performing well. I look forward to having the test moved to optical character mark reader paper so that item analysis might also be done in the future.

Data is still outstanding for a couple of sections. If I get updated data I will share that with the group. The underlying spreadsheet is available upon request, with the proviso that FERPA restrictions apply to the non-anonymous, non-aggregated data.